How AI is Changing How Programmers Work (and What It Means for the Rest of Us)

I came across a fascinating study from Anthropic about how their engineers use AI in their work, and honestly, it hit close to home. Here are the numbers the engineers reported: productivity up by 50%, with Claude used in 60% of work tasks. But the most interesting part isn’t the speed-up; it’s the fundamental transformation of the work itself.

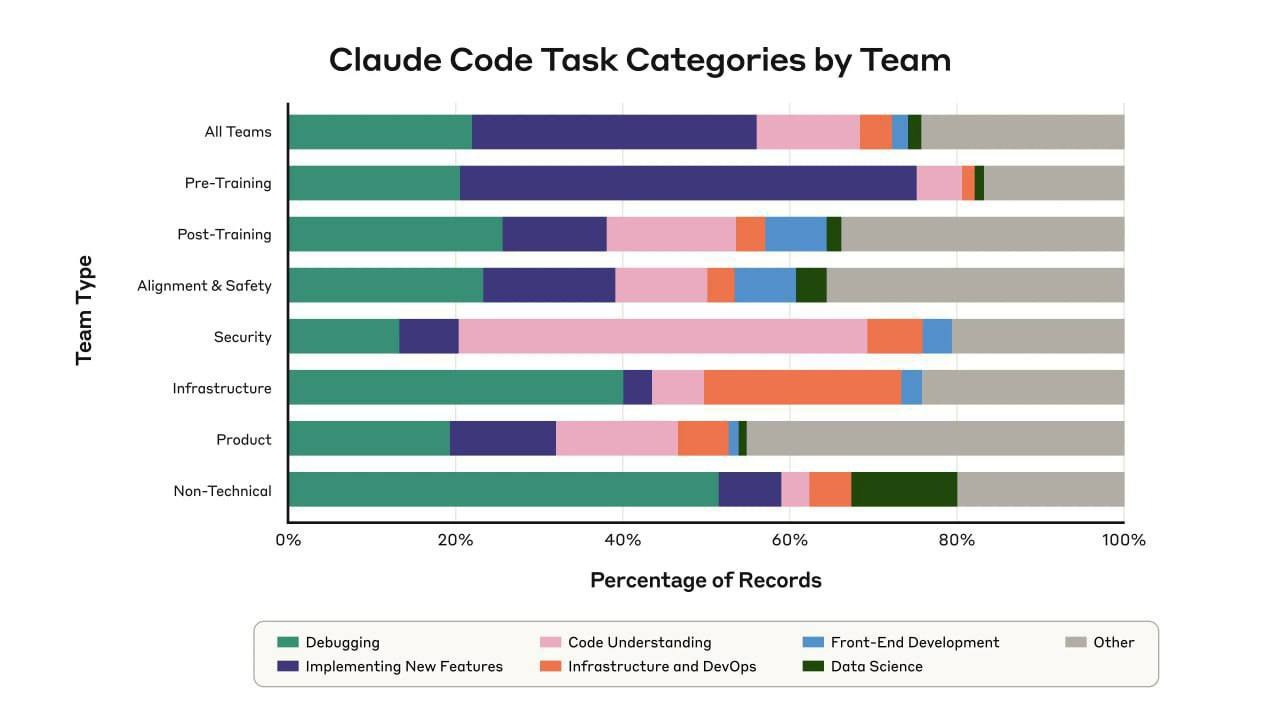

Engineers are becoming more full-stack, venturing easily into areas they previously avoided touching. A backend engineer building complex UIs. Researchers creating data visualizations. Security teams analyzing unfamiliar code. This is not just about doing the same work faster; it’s about doing entirely different work.

The Productivity Paradox

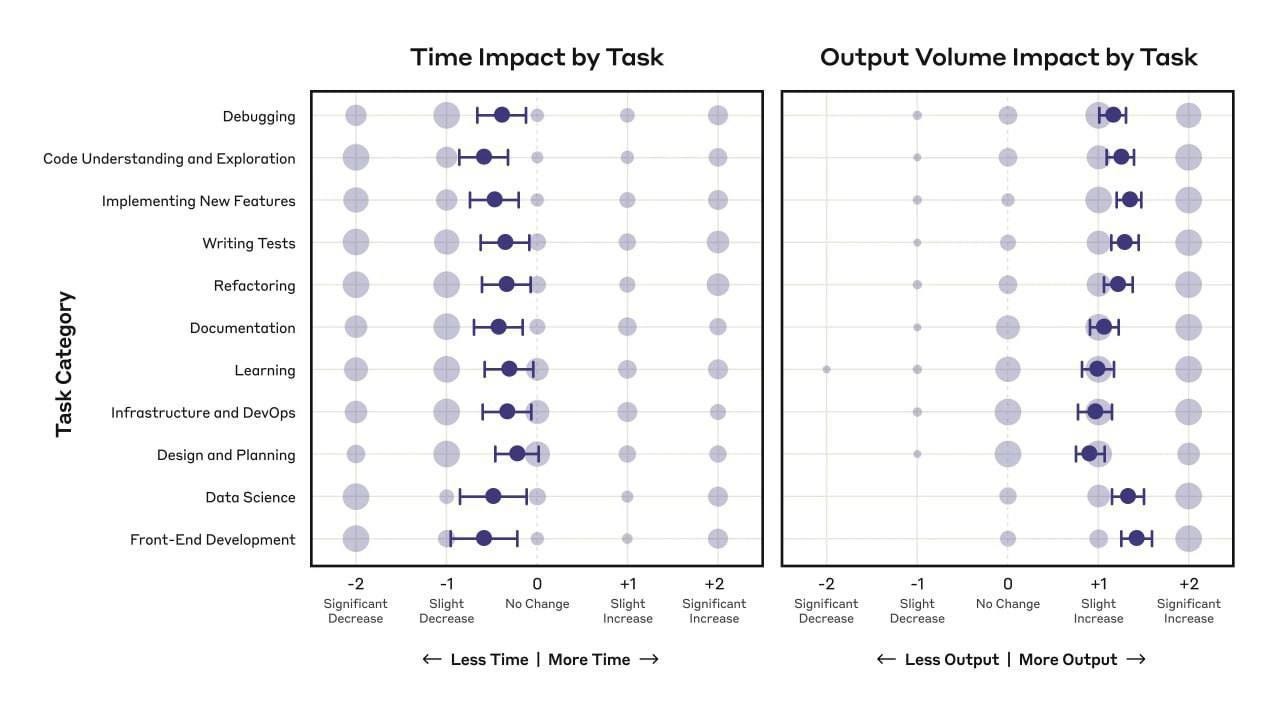

While productivity is clearly up, the picture is more nuanced than “AI makes everything faster.” When you dig into the data, engineers report spending slightly less time per task category, but producing considerably more output volume.

Think about that for a second. You’re not necessarily finishing your debugging faster; you’re debugging way more things. You’re not writing each feature quicker; you’re writing more features, period.

And here’s the kicker: 27% of Claude-assisted work consists of tasks that wouldn’t have been done otherwise. We’re talking about overnight demos, interactive dashboards, exploratory work that would never have been cost-effective manually. One researcher described running multiple versions of Claude simultaneously, each exploring different approaches: “People tend to think about super capable models as a single instance, like getting a faster car. But having a million horses… allows you to test a bunch of different ideas.”

The Hidden Costs Nobody Talks About

Obviously, productivity gains come with new challenges, and this is where the Anthropic research gets really honest.

Some engineers worry their skills might atrophy. When code is generated so easily, it becomes harder to truly learn something deeply. One engineer put it perfectly: “When producing output is so easy and fast, it gets harder and harder to actually take the time to learn something.”

I’ve noticed this myself. Using AI consciously while maintaining the ability to critically evaluate results is getting increasingly difficult, because the results often look really impressive at first glance. This is dangerous. As I’ve written before, one of the biggest mistakes in business is tolerance for mediocrity, and technology is inadvertently bringing more of it into our lives.

There’s also what researchers call the “paradox of supervision.” Effectively using Claude requires supervision. But supervising Claude requires the very coding skills that may atrophy from AI overuse. Think about that circular trap for a moment.

Some engineers are deliberately practicing without AI to stay sharp. Others argue we’re moving to higher levels of abstraction, similar to how we moved from assembly language to Python, and that this is progress, not loss.

The Social Fabric is Changing

Another point in the report hit me hard: the shift in social dynamics.

Previously, people would turn to senior colleagues for advice; now they turn to Claude. This reduces live communication and the quality of mentorship. One engineer admitted: “I like working with people and it’s sad that I ‘need’ them less now… More junior people don’t come to me with questions as often.”

This is about the erosion of something fundamental to how we learn, grow, and build culture in organizations. When you ask Claude instead of your colleague, you get an answer, but you lose the conversation, the context, the relationship. You lose the informal knowledge transfer that happens when someone explains not just what to do but why they made certain decisions years ago.

Some engineers even admit they feel like they’re “automating themselves out of a job” and aren’t sure what their future role will be.

What Engineers Are Actually Learning About AI Delegation

The Anthropic engineers have developed remarkably consistent intuitions about what to delegate to AI and what to keep for themselves. They delegate tasks that are:

Outside their expertise but low complexity (“I don’t know Git or Linux very well… Claude does a good job covering for my lack of experience”)

Easily verifiable (“It’s absolutely amazing for everything where validation effort isn’t large”)

Repetitive or boring (“The more excited I am to do the task, the more likely I am to not use Claude”)

Lower stakes (throwaway code, debugging, research scripts)

What they keep for themselves: high-level thinking, strategic decisions, design choices requiring “taste” or organizational context.

But here’s the thing: this boundary is constantly moving. One engineer compared it to adopting Google Maps: at first you only use it for routes you don’t know, then for routes you mostly know, and eventually for everything, even your daily commute. The same progression is happening with AI.

The Future Belongs to Strategic Thinkers

The developer’s role is evolving from a craftsman writing code to a strategist managing AI agents. This really resonates with my thoughts on how important it is to develop internal entrepreneurship within teams.

Look at the data: engineers are spending more time on high-level design and less on implementation details. They’re tackling increasingly complex tasks: average task complexity jumped from 3.2 to 3.8 on a 5-point scale in just six months. Claude Code now chains together 21 consecutive actions autonomously, up from 10 six months ago.

But complexity isn’t the same as strategic thinking. The winners will be those who can not just delegate tasks, but ask the right questions, see the bigger picture, and turn AI capabilities into real products.

This is where most organizations will fail. They’ll focus on the productivity gains while missing the fundamental shift in what skills matter. They’ll celebrate engineers writing less code without asking whether those engineers are thinking more strategically. They’ll tolerate mediocre AI-generated output because it’s “good enough” and fast, forgetting that “good enough” compounds into deep mediocrity over time.

What This Means for Your Business

If you’re running a team, here are the uncomfortable questions you need to ask:

Are your people developing judgment, or just learning to prompt? There’s a difference between someone who can get Claude to generate code and someone who can evaluate whether that code solves the right problem in the right way.

Are you preserving the social fabric that enables knowledge transfer? When junior engineers stop asking senior engineers questions, you’re not just losing mentorship; you’re losing the next generation’s ability to become mentors themselves.

Are you raising your bar or lowering it? Just because AI can do something doesn’t mean the result is excellent. Are you using AI to achieve things that were previously impossible, or are you using it to accept things that are merely adequate?

Are you building entrepreneurial thinking into your team? The engineers who will thrive aren’t the ones who write the most code; they’re the ones who can see opportunities, make strategic bets, and orchestrate resources (including AI) to build something meaningful.

The Uncomfortable Truth

Some Anthropic engineers expressed a conflict between short-term optimism and long-term uncertainty. “I feel optimistic in the short term but in the long term I think AI will end up doing everything and make me and many others irrelevant,” one stated bluntly.

Others were more pragmatic: “The important thing is to just be really adaptable.”

Here’s my take: the future belongs to those who know how to think, not to those who write code.

AI is incredible at execution, and it’s getting better at it every day. But execution without strategic thinking is just expensive activity. The gap between activity and achievement is judgment: knowing what to build, why to build it, and how to know if it’s working.

This shift is happening faster than most people realize.

What are you doing to ensure your team isn’t just working faster, but thinking better?
