The Shadow AI Revolution in Medicine: What 1,000+ Physicians Really Think

Here’s something that should worry every hospital administrator: 67% of physicians are using AI daily in their practice. And most of them are paying for it themselves.

We’re witnessing a classic case of bottom-up innovation in one of the world’s most conservative industries. While healthcare organizations hold endless meetings about AI governance frameworks, their physicians have already moved on. They’re using personal ChatGPT accounts, paying for Claude subscriptions with their own credit cards, and building workflows around tools their employers don’t even know exist.

The 2025 Physicians AI Report surveyed over 1,000 physicians across 106 specialties, and the data tells a story I’ve seen play out in every industry I’ve worked with: the gap between what frontline professionals need and what organizations deliver creates a shadow market. In medicine, that market is already massive.

The Numbers

Here’s the paradox that should grab your attention: 84% of physicians say AI makes them better at their jobs. 78% believe it improves patient health. 42% say AI adoption makes them more likely to stay in medicine.

And yet, 81% are dissatisfied with how their employers handle AI.

That’s not a technology problem. That’s a trust problem.

The issue isn’t adoption; physicians are already there. The issue is control. 71% of physicians report having little to no influence on which AI tools their organizations adopt. Nearly half say their employer’s communication about AI is poor. And 89% believe they should receive dedicated funding for AI tools (most want between $500 and $1,000 annually, though some are asking for $10,000 or more).

Think about what this means: physicians trust AI enough to pay for it themselves, but they don’t trust their organizations to choose the right tools or deploy them properly.

Why OpenEvidence Wins

The fragmented tool landscape tells you everything about where we are. The report identified 71 unique AI tools in active use. OpenEvidence leads at 37%, but that means 63% of the market is scattered across dozens of other solutions.

What makes OpenEvidence the leader isn’t sophisticated technology. It’s physician verification and a focus on vetted sources. In an industry where the cost of error can be measured in lives, trust infrastructure matters more than features.

But here’s what’s interesting: ChatGPT comes in second at 15.6%. It’s a general-purpose tool with no medical specialization whatsoever, yet physicians have adapted it to their specific needs. That says something about the power of flexibility and user control compared with purpose-built solutions that don’t actually solve the right problems.

The specialized tools that are succeeding (Abridge at 4.9%, DAX Copilot, Heidi, Freed) share a common characteristic: they solve one very specific problem really well. Usually that problem is documentation.

The Documentation Problem Nobody Wants to Talk About

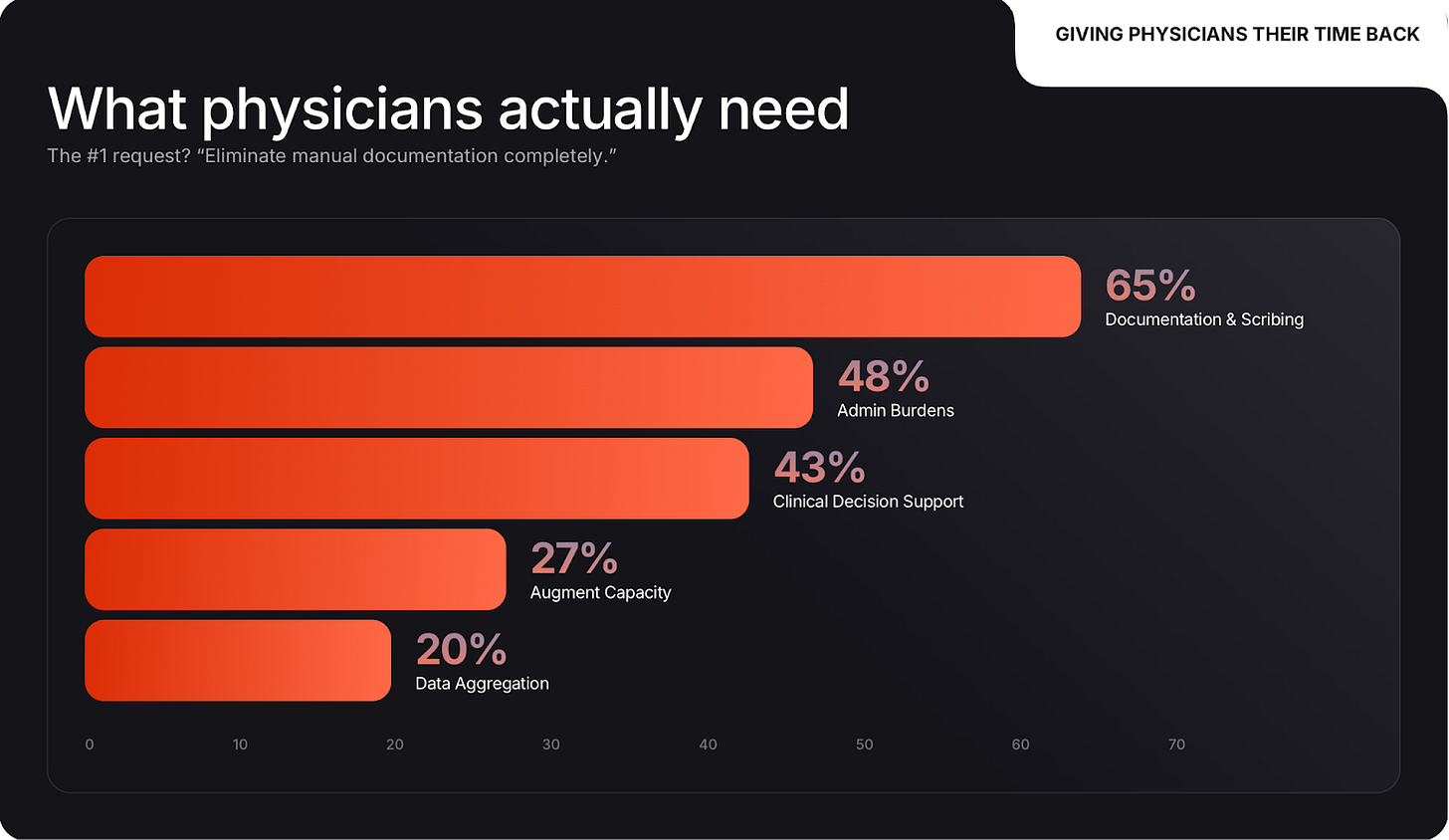

Ask physicians what they want from AI and the answer is embarrassingly unglamorous: eliminate paperwork.

65% named documentation and administrative burden automation as their top priority. That’s roughly one and a half times the share who named clinical decision support (43%). The dream isn’t diagnostic AI or predictive analytics. The dream is “give me back the hours I lost to inbox management.”

This mirrors exactly what I see in pharma and manufacturing. The most successful implementations are the ones that remove friction from daily work. Abridge’s value proposition is almost comically simple: set your phone down, record the appointment, and get a structured medical record. But it solves a massive pain point.

The gap between what AI vendors pitch and what physicians actually need is enormous. While companies demo sophisticated diagnostic algorithms, physicians are drowning in administrative tasks that AI could handle today.

The Control Problem

Here’s where it gets really interesting. The physicians demanding more influence aren’t fresh graduates experimenting with new tech. They’re experienced professionals with 15-20 years of practice, working across all settings: hospitals, private practice, academic medicine.

These are people who know what works. And they’re being systematically excluded from decisions about which tools they’ll be required to use.

67% say having more influence over AI tool selection would increase their job satisfaction. When physicians choose their own AI tools, 95% of their colleagues have neutral or positive reactions. But when tools are imposed from above? That number drops dramatically.

Listen to what physicians actually say: “Despite physician productivity gains from AI tools, physicians will have a higher patient volume to care for with no proportional increase in compensation. The C-suite is incentivized to use AI as a cost-cutting strategy.”

Another: “The most sophisticated AI will end up in the hands of third-party payers and bureaucracy, not physicians.”

They’re not afraid AI will make them obsolete. They’re afraid AI will be used to extract more work from them while benefiting everyone except the physicians and patients.

The Shadow IT Problem in Healthcare

The scale of shadow AI usage should be a wake-up call. Physicians are paying personal subscription fees for ChatGPT, Claude, Grok, and Perplexity. They’re routing patient information through tools that would give their compliance departments heart attacks if they knew.

Why? Because the alternative is worse. The officially sanctioned tools are either non-existent, inadequate, or selected by administrators who’ve never treated a patient.

This exact pattern played out in enterprise software for years. Employees used personal Dropbox accounts because IT couldn’t provision file sharing fast enough. They bought their own SaaS subscriptions because the approved vendor list was three years out of date. Eventually, smart organizations realized they needed to meet their employees where they were, not where the procurement process wanted them to be.

Healthcare is having that same reckoning right now. The difference is that the stakes are higher: patient privacy, regulatory compliance, and clinical outcomes are all in play.

What Physicians Actually Want

Strip away all the noise and physicians are asking for four things:

Control. Not complete autonomy, but meaningful input into which tools they’ll use daily. They want to be part of the decision, not just informed after the fact.

Transparency. Where does the AI come from? How does it work? What are its limitations? Who’s liable when it’s wrong? These aren’t unreasonable questions.

Time back. Automate the bureaucratic nonsense so they can focus on patients. One physician put it perfectly: “AI smooths out the clunkiness of present-day EMR systems and restores the physician-patient relationship.”

Alignment of incentives. If AI makes physicians more productive, that benefit should be shared, not just captured by administrators to increase patient volume without increasing compensation.

When you read through the hundreds of physician comments in the report, a theme emerges: they’re not asking for the moon. They want tools that solve real problems, transparency about how those tools work, and a voice in decisions that affect their daily practice.

The Classic Innovation Adoption Pattern

We’re watching a textbook example of how innovation penetrates conservative industries.

First, individual practitioners experiment on their own. They find tools that work and share them with trusted colleagues. Use spreads through informal networks faster than official channels.

Second, organizations notice the gap between official policy and actual practice. Some adapt. Others double down on control and drive the shadow usage further underground.

Third, regulatory and competitive pressure forces broader adoption. But by this point, the organizations that moved early have already captured the benefits and built the institutional knowledge.

Healthcare is somewhere between stage one and stage two right now. 67% daily usage means we’re past the early adopter phase. But 81% dissatisfaction with organizational approach means most healthcare organizations haven’t figured out how to properly support and channel that adoption.

The question isn’t whether AI will transform medicine. Physicians have already answered that: they’re using it daily and report that it makes them better at their jobs. The question is whether organizations will adapt to this reality or whether the gap will keep growing until something breaks.

What Needs to Happen

The path forward for organizations is to:

Give physicians funding and agency. Start with modest stipends ($500 to $1,000 per year) that physicians can use for AI tools they find valuable. Track what they choose. Learn from their decisions.

Include physicians in procurement. Not a token representative on a committee. Actual working physicians with a meaningful voice in which enterprise tools get adopted.

Focus on documentation and administrative relief. These aren’t sexy AI applications, but they deliver immediate ROI in physician satisfaction and time saved. Build from there.

Accept that the landscape will be fragmented. Different specialties have different needs. Emergency medicine physicians need different tools than radiologists. Stop trying to force everyone onto a single platform.

Move faster. The 81% dissatisfaction rate exists because organizations are too slow. By the time a tool makes it through procurement, physicians have already found and adopted three alternatives on their own.

It all comes down to whether healthcare organizations trust the clinical judgment of their physicians enough to give them agency over their own tools. Every day the gap between official policy and actual practice persists, the shadow AI market grows larger, more entrenched, and harder to bring back under proper governance.

The physicians are ready. The technology exists. The question is whether healthcare administration will move fast enough to close the gap before it becomes unbridgeable.